I have begun doing some work with the Northern New Zealand Seabird Trust, who invited me to come and help them with some field work on the Poor Knights Islands. My father visited the islands when he worked for DOC in the 1990s. His stories about the reptile abundance really inspired me to do restoration work, so I jumped at the opportunity to go.

Landing on the island is notoriously difficult and our first shot at it was delayed, so we had to go back to Auckland to wait for better weather. The islands are surrounded by steep cliffs that made European habitation impractical, and Māori left the area in the 1820s. This means the island I visited has never had introduced mammals, not even kiore! I spent days cleaning my gear to get through the biosecurity requirements, which are incredibly strict for good reason.

I have explored a few predator-free islands, including Hauturu / Little Barrier Island, which has been described as New Zealand’s most intact ecosystem. However, it was only cleared of rats in 2004. When I am photographing invertebrates at night in mainland sanctuaries or forests with predator control (like Tāwharanui Regional Park or parts of the Waitakere Ranges) I see one reptile every eight hours or so. On Hauturu / Little Barrier Island I see them every 20 minutes, but on the Poor Knights it was every two minutes! Bushbird numbers were lower than on other islands, and I expect this is because reptiles and birds compete for prey species. I wonder if reptile numbers on other islands might be slower to recover because they are preyed on by bushbirds. I reckon that the Poor Knights’ total reptile and bushbird biomass is much greater than that of the restored islands I have visited. One reason is that reptiles use less energy to hunt than bushbirds, but another might be that the island has more seabirds.

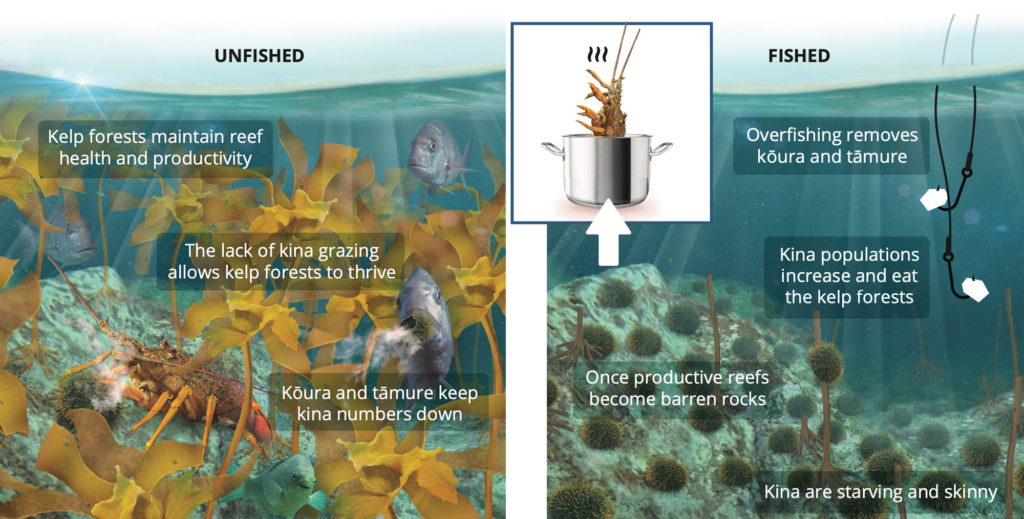

While walking through the bush at night I would sometimes hear a crashing in the canopy followed by a soft thump on the ground. In an incredible navigational feat, the seabirds somehow land only metres from their burrows. At night I heard Buller’s shearwater, grey-faced petrel, little penguins and diving petrel (fairy prion finish breeding in February). While monitoring birds at night I was showered with dirt by a Buller’s shearwater that was digging out a burrow. In my short time on the island I saw cave weta and three species of reptile using the burrows. Like a rock forest, the burrows add another layer of habitat to the ecosystem. It was incredibly touching to see the care and compassion the researchers had for some of the chicks, who were starving while waiting for their parents, who often have to travel hundreds of kilometres to find enough food. The chicks that don’t make it die in their burrows and are eaten by many invertebrates, and the invertebrates in turn become reptile or bushbird food. The soil on the island looked thick and rich. When it rains, nutrients are brought down into the small but famous marine reserve, which is teeming with life.

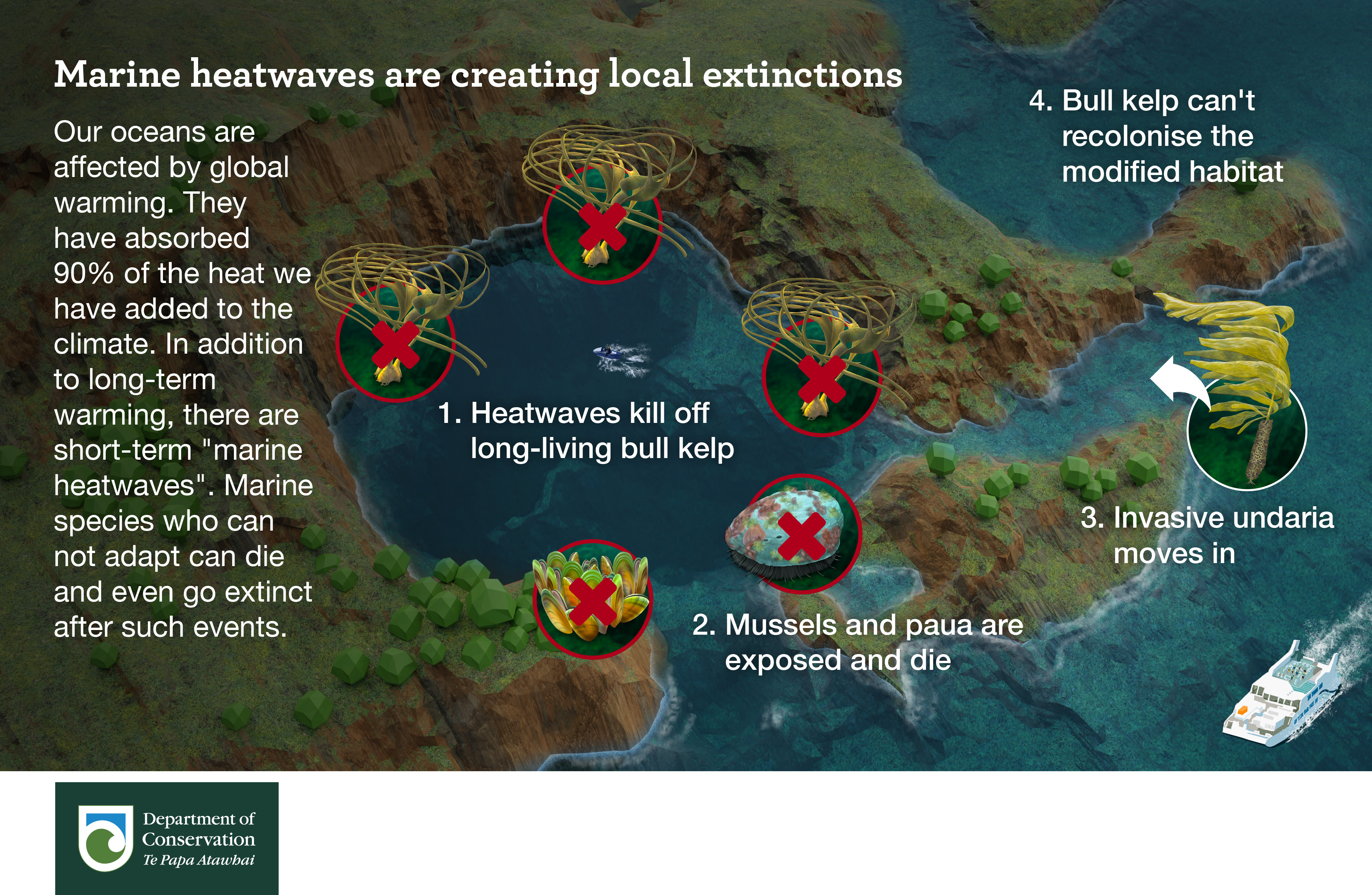

I was only on the island for three nights, but I was very fortunate to experience a pristine ridge-to-reef ecosystem. Seabirds are incredible ecosystem engineers that were an integral part of New Zealand’s inland forests for millions of years. Communities are making small efforts to bring seabirds back to predator-free island and mainland sites, but they have no control over seabird food sources. If we really want intact ecosystems, we will have to make sure our oceans have enough food for seabirds to feed our forests.