I’m experimenting with mapping the seafloor for restoration projects. This is what I have done so far:

- Record a time-lapse sequence, swimming as slowly as possible (GoPro Hero 7 Black, linear, 0.5sec)

- Buy Agisoft Metashape Standard (about $300NZD)

- Import photos to chunk

- Align photos (Highest gave duplication errors, High worked best; Reference preselection [sequential]; Guided image matching)

- Don’t clean up the point cloud (not needed for orthomosaics)

- Build mesh (use sparse cloud source data, Arbitrary 3D)

- Build texture (Adaptive orthophoto, Mosaic, Enable hole filling, Enable ghosting filter)

- Capture view (hide cameras and other visual aids first then export .PNG at a ridiculous resolution)

Agisoft Metashape worked much better than Adobe’s photo stitching software (Photoshop & Lightroom) on the same data. But I need more overlapping images, as none of the software packages could match all the images in any of the four test sequences I did.

I’m going to test shooting in video next. The frames will be smaller, 2704×1520 (if I stick with linear to avoid extra processing for lens distortion) instead of the 4000×3000 I get with the time-lapse, but I’m hoping all the extra frames will more than compensate (2FPS=>24FPS).
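To put rough numbers on that trade-off, here is a small sketch comparing how many pixels per second each mode records. It only uses the frame sizes and rates mentioned above; the function name is just for illustration.

```python
def pixels_per_second(width, height, fps):
    """Raw pixel throughput of a capture mode."""
    return width * height * fps

# Time-lapse at a 0.5 s interval is 2 frames per second.
timelapse = pixels_per_second(4000, 3000, 2)
video = pixels_per_second(2704, 1520, 24)

print(f"time-lapse: {timelapse / 1e6:.1f} MP/s")
print(f"video:      {video / 1e6:.1f} MP/s")
print(f"ratio:      {video / timelapse:.2f}x")
```

Each video frame has only about a third of the pixels of a time-lapse frame, but at twelve times the frame rate the video still records roughly four times as many pixels per second.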

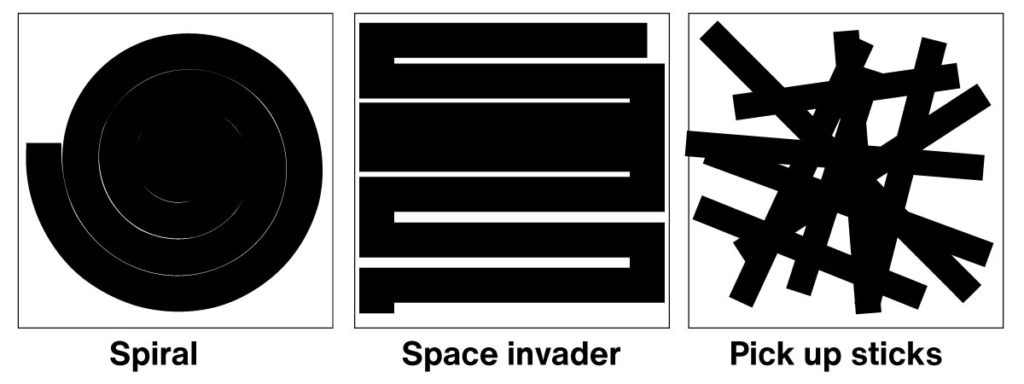

In theory an ROV will be better but I don’t think there are any on the market that know where they are based on what they can see. All the workarounds for knowing where you are underwater are expensive; here are two: UWIS & Nimrod. I want to see if we can do this with divers and no location data. I don’t think towing a GPS will be accurate enough to match up the photos, but it does seem to work with drones taking images of bigger scenes (I want this to work in 50cm visibility). I expect that if I want large complete images the diver will need to follow another diver who has left a line on the seafloor. One advantage of this is that the line could have a scale on it, but I’m hoping to avoid it as the lines will be ugly 😀 So far I can do only two turns before it fails. There are three patterns that might work (Space invaders, Spirals and Pick up sticks). For my initial trials I am focusing on Space invaders.

Video provides lots more frames and the conversion is easy. A land-based test with GPS disabled, multiple turns, 2500 photos, 2704 x 2028 linear, space invader pattern at 1.2m from the ground worked perfectly. However I can’t get it to work underwater. In every test so far Metashape will only align 50-100 frames. I tried shooting on a sunny day, which was terrible as the reflection of the waves dancing on the seafloor confuses the software. But two follow-up shoots also failed. When I look at the frames Metashape can’t match, I just don’t see why it can’t align them. These two images are in sequence; one gets aligned and the next one is dropped!

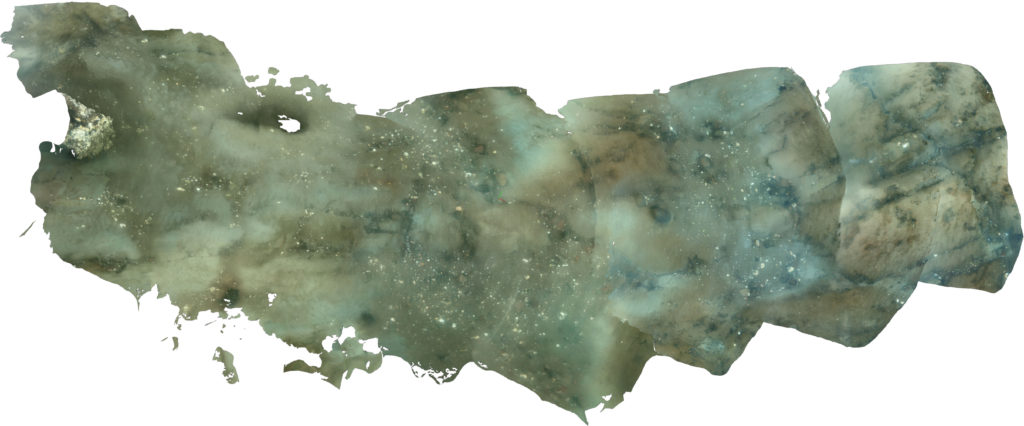

Here is what the test footage looks like, I have increased the contrast.

I have also tried exporting the frames at 8fps to see if the alignment errors are happening because the images are too similar but got similar results (faster).

Detailed advice from Metashape:

Since you are using Sequential pre-selection, you wouldn’t get matching points for the images from the different lines of “space invader” or “pick up sticks” scenarios or from different radius of “spiral” scenario.

If you are using “space invader” scenario and have hundreds or thousands of images, it may be reasonable to align the data in two iterations: with sequential preselection and then with estimated preselection, providing that most of the cameras are properly aligned.

As for the mesh reconstruction – using Sparse Cloud source would give you very rough model, so you may consider building the model from the depth maps with medium/high quality in Arbitrary mode. As for the texture reconstruction, I can suggest to generate it in Generic mode and then in the model view switch the view from Perspective to Orthographic, then orient the viewpoint in the desired way and use Capture View option get a kind of planar orthomosaic projection for your model.

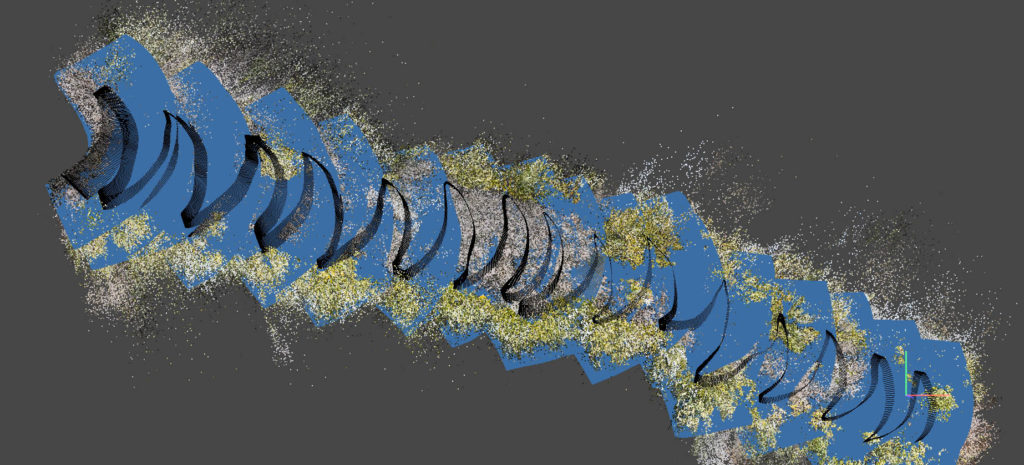

Align ‘sequential’ only ever gets about 5% of the shots. Repeating the alignment procedure on ‘estimated’ picks up the rest but the camera alignment gets curved. I think I have calibrated the cameras to 24mm (it’s hard to see if that has been applied) but it doesn’t seem to change things.
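Here is a toy sketch of why sequential preselection cannot link neighbouring lines of a space invader pattern. It is not how Metashape matches images; it just compares "frames close together in the sequence" with "frames close together on the seafloor". The spacing, window and radius values are made up for illustration.

```python
import math

def lawnmower_path(lines=4, frames_per_line=50, frame_gap=0.1, line_gap=1.0):
    """Camera centres (x, y) in metres along a back-and-forth path."""
    path = []
    for line in range(lines):
        xs = [i * frame_gap for i in range(frames_per_line)]
        if line % 2 == 1:  # every second line is swum in the opposite direction
            xs.reverse()
        path.extend((x, line * line_gap) for x in xs)
    return path

def sequential_pairs(n, window):
    """Pairs of frames at most `window` positions apart in the sequence."""
    return {(i, j) for i in range(n)
            for j in range(i + 1, min(i + window, n - 1) + 1)}

def spatial_pairs(path, radius):
    """Pairs of frames whose camera centres are within `radius` metres."""
    return {(i, j) for i in range(len(path))
            for j in range(i + 1, len(path))
            if math.dist(path[i], path[j]) <= radius}

path = lawnmower_path()
seq = sequential_pairs(len(path), window=10)
near = spatial_pairs(path, radius=1.2)
missed = near - seq  # overlapping frames that sequential matching never compares
print(f"overlapping pairs: {len(near)}, missed by sequential matching: {len(missed)}")
```

The first frame of line one and the last frame of line two sit side by side on the seafloor but are 99 frames apart in the sequence, so a sequential window never pairs them. That is the gap a second pass with estimated preselection is meant to fill.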

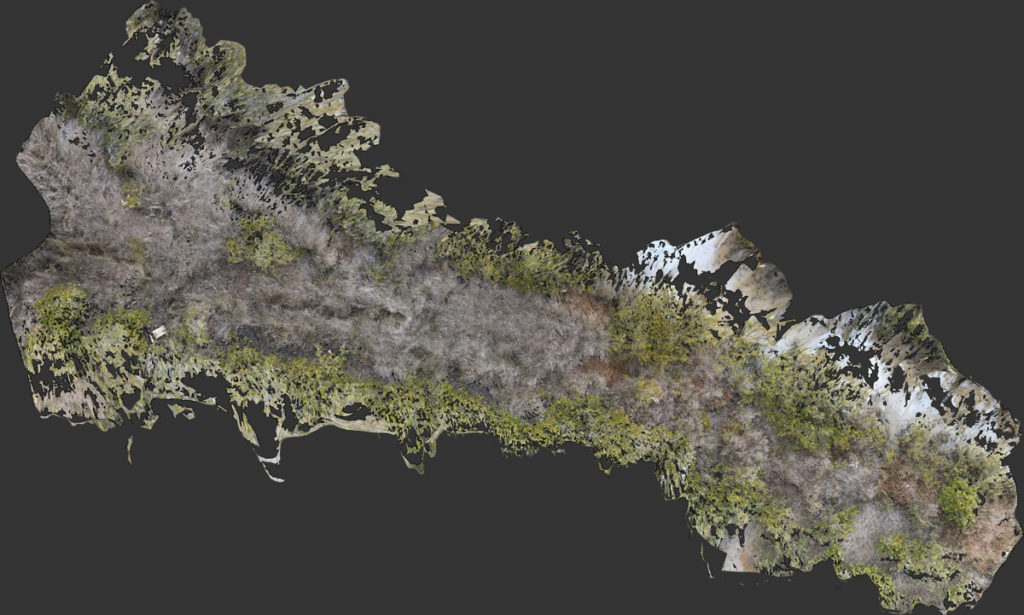

I tried an above water test and made a two minute video of the Māori fish dams at Tahuna Torea. I used the same settings as above, but dropped the quality down to medium. It looks great!

The differences between above and below water are: Camera distance to subject, flotsam, visibility / image quality and colour range. If the footage I am gathering is too poor for Metashape to align it might mean we need less suspended sediment in the water to make the images. That’s a problem as the places I want to map are suffering from suspended sediment – which is why they would benefit from shellfish restoration.

The Agisoft support team are awesome. They processed my footage with f = 1906 in the camera calibration, aligning photos without preselection and with a 10,000 tie point limit. The alignment took 2.5 days but worked perfectly (click on the image below). There are a few glitches but I think the result is good enough for mapping life on the seafloor. I will refine the numbers a bit and post them in a separate blog post, wahoo!

Update Jul 2022: Great paper explaining the process with more sophisticated hardware

Final method

Here is my photogrammetry process / settings for GoPro underwater. I am updating them as I learn more. Please let me know if you discover an improvement. Thanks to Vanessa Maitland for her help refining the process.

Step 1: Make a video of the seafloor using a GoPro

- If you’re at less than 5m deep you will need to go on a cloudy day for even light

- Make sure you shoot 4k, Linear. Also make sure the orientation is locked in preferences.

- Record your location and the direction you’re going to swim if you want to put your orthomosaic on a map

- Swim in a spiral using a line attached to a peg to keep the distance from it even

- Don’t leave any gaps or you will generate a spherical image
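For planning a dive, a rough sketch like the one below estimates how far you will swim for a given spiral. It assumes an idealised spiral whose radius grows by a fixed spacing on every turn; the radius, spacing and swim speed are hypothetical numbers, not measurements from my dives.

```python
import math

def spiral_length_m(max_radius_m, spacing_m, steps_per_turn=360):
    """Approximate length of a spiral whose radius grows by spacing_m per turn."""
    total_steps = round(max_radius_m / spacing_m * steps_per_turn)
    length, previous = 0.0, (0.0, 0.0)
    for k in range(1, total_steps + 1):
        angle = 2 * math.pi * k / steps_per_turn
        radius = spacing_m * k / steps_per_turn
        point = (radius * math.cos(angle), radius * math.sin(angle))
        length += math.dist(previous, point)  # add the short straight segment
        previous = point
    return length

# Hypothetical survey: 5 m radius, 0.5 m between turns, swimming at 0.2 m/s.
length = spiral_length_m(5, 0.5)
minutes = length / 0.2 / 60
print(f"about {length:.0f} m of swimming, roughly {minutes:.0f} minutes of video")
```

Halving the spacing between turns doubles the distance, so tighter spirals cost a lot of dive time and processing time.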

Step 2: Edit your video

- There are lots of software packages that will do this. I use Adobe After Effects, where I can increase the contrast in the footage and add more red light depending on the depth. You might also find it easier to trim your video here, but you can also do it in Step 5.

Step 3: Install and launch Agisoft Metashape Standard.

- These instructions are for version 1.8.1

Step 4: Use GPU.

- In ‘Preferences’, ‘GPU’ select your GPU if you have one. I also checked ‘Use CPU when performing accelerated processing’

Step 5: Import video

- From the menu choose: ‘File’, ‘Import’, ‘Import Video’.

- The main setting to play with here is the ‘Frame Step’. We have had success with using every third frame, which cuts down on processing time.

- If you have multiple videos you will have to import multiple chunks. I recommend combining them before processing using ‘Workflow’, ‘Merge Chunks’. I have had better results doing this than processing each chunk individually and then choosing ‘Workflow’, ‘Align Chunks’.
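To see what a frame step does to the number of images you will be processing, here is a minimal sketch. It assumes the import keeps frame 0 and then every Nth frame after it, which is my reading of the setting rather than something I have confirmed; the clip length is hypothetical.

```python
def kept_frames(total_frames, frame_step):
    """Indices of the frames kept when importing every `frame_step`-th frame."""
    return list(range(0, total_frames, frame_step))

# Hypothetical clip: 2 minutes at 24 fps, importing every third frame.
fps = 24
total = 2 * 60 * fps
kept = kept_frames(total, frame_step=3)
print(f"{total} frames in the clip, {len(kept)} imported, "
      f"{fps / 3:.0f} frames per second of footage")
```

A frame step of 3 on 24 fps footage is the same as the 8fps export I tried earlier.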

Step 6: Camera calibration

- From the menu choose: ‘Tools’, ‘Camera calibration’

- Change ‘Type’ from ‘Auto’ to ‘Precalibrated’

- Change the ‘f’ value to 1906
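The ‘f’ value in Metashape is a focal length in pixels, not millimetres, so it depends on the frame width as well as the lens. The sketch below converts it to a field of view and a 35mm-equivalent focal length using a simple pinhole model. The 2704 pixel frame width is an assumption taken from my earlier test footage; swap in the width of your own frames.

```python
import math

def horizontal_fov_deg(f_px, image_width_px):
    """Horizontal field of view of an ideal pinhole camera, in degrees."""
    return math.degrees(2 * math.atan(image_width_px / (2 * f_px)))

def equivalent_focal_length_mm(f_px, image_width_px, full_frame_width_mm=36.0):
    """35mm-equivalent focal length, scaled by image width."""
    return f_px * full_frame_width_mm / image_width_px

# Assumption: 2704 pixel wide frames. Change `width` to match your footage.
f_px, width = 1906, 2704
print(f"{horizontal_fov_deg(f_px, width):.1f} degrees horizontal")
print(f"{equivalent_focal_length_mm(f_px, width):.1f} mm equivalent")
```

If the same field of view is recorded on a frame twice as wide, the matching f value is twice as large, so it is worth checking the number against the resolution you actually shot.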

Step 7: Align photos

- From the menu choose: ‘Workflow’, ‘Align Photos’

- Check ‘Generic preselection’

- Uncheck ‘Reference preselection’

- Uncheck ‘Reset current alignment’

- Select ‘High’ & ‘Estimated’ from the dropdown boxes

Under ‘Advanced’ choose:

- ‘Key point limit’ value to 40,000

- ‘Tie point limit’ value to 10,000

- Uncheck ‘Guided image matching’ & ‘Adaptive camera model fitting’

- Leave ‘Exclude stationary tie points’ checked

If fewer than 100% of the cameras are aligned, try Step 8; otherwise skip to Step 9.

Step 8: Align photos (again)

- From the menu choose: ‘Workflow’, ‘Align Photos’

- Uncheck ‘Generic preselection’

- Check ‘Reference preselection’

- Uncheck ‘Reset current alignment’

- Select ‘High’ & ‘Sequential’ from the dropdown boxes

Under ‘Advanced’ choose:

- ‘Key point limit’ value to 40,000

- ‘Tie point limit’ value to 4,000

- Uncheck ‘Guided image matching’ & ‘Adaptive camera model fitting’

- Leave ‘Exclude stationary tie points’ checked

Now all the photos should be aligned. If not, repeat Steps 7 and 8 with higher settings, checking ‘Reset current alignment’ in Step 7 only. I have been happy with models that have 10% of photos not aligned.
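The decision logic across Steps 7 and 8 can be summarised in a few lines. This is only a sketch of how I work through it; the function name is made up, and treating 10% unaligned as a hard cut-off is an assumption for the example rather than a rule.

```python
def next_step(aligned, total, passes_done, acceptable_unaligned=0.10):
    """Suggest what to do after an alignment pass.

    passes_done is 1 after Step 7 and 2 after Step 8.
    """
    unaligned = total - aligned
    if unaligned == 0:
        return "Step 9"
    if passes_done == 1:
        return "Step 8"
    if unaligned <= acceptable_unaligned * total:
        return "Step 9 (accept the unaligned photos)"
    return "repeat Steps 7 and 8 with higher settings"

print(next_step(aligned=800, total=960, passes_done=1))
print(next_step(aligned=900, total=960, passes_done=2))
```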

Step 9: Tools / Optimize Camera Locations

Just check the check boxes below (default settings):

- Fit f, Fit k1, Fit k2, Fit k3

- Fit cx, cy, Fit p1, Fit p2

Leave the other checkboxes (including Advanced settings) unchecked.

Step 10: Resize region

Use the region editing tools in the graphical menu to make sure that the region covers all the photos you want to turn into a 3D mesh. You can change what is being displayed in the viewport under ‘Model’, ‘Show/Hide Items’.

Step 11: Build dense cloud

- Quality ‘High’

- Depth filtering ‘Moderate’

- Uncheck all other boxes.

Step 12: Build mesh

- From the menu choose: ‘Workflow’, ‘Build Mesh’

- Select ‘Dense cloud’, ‘Arbitrary’

- Select ‘Face count’ ‘High’

- Under ‘Advanced’ leave ‘Interpolation’ ‘Enabled’

- Leave ‘Calculate vertex colours’ checked

Step 13: Build texture

- From the menu choose: ‘Workflow’, ‘Build Texture’

- Select ‘Diffuse map’, ‘Images’, ‘Generic’, ‘Mosaic (default)’ from the dropdown menus.

- The texture size should be edited to suit your output requirements. The default is ‘4096 x 1’

- Under ‘Advanced’ turn off ‘Enable hole filling’ & ‘Enable ghosting filter’ if you’re using the image for scientific rather than advocacy reasons.

Step 14: Export orthomosaic

You can orientate the view using the tools in the graphical menu. Make sure the view is in orthographic before you export the image (5 on the keypad). Then choose ‘View’, ‘Capture View’ from the menu. The maximum pixel dimensions are 16,384 x 16,384. Alternatively you can export the texture.
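The 16,384 pixel limit sets an upper bound on how fine the exported image can be. A quick sketch, assuming the mapped area fills the full width of the captured view; the survey widths are hypothetical.

```python
MAX_CAPTURE_PX = 16_384  # maximum Capture View dimension quoted above

def mm_per_pixel(scene_width_m, image_width_px=MAX_CAPTURE_PX):
    """Seafloor distance covered by one pixel of the exported image."""
    return scene_width_m * 1000 / image_width_px

for width_m in (5, 10, 20):  # hypothetical survey widths in metres
    print(f"{width_m:>2} m wide: {mm_per_pixel(width_m):.2f} mm per pixel")
```

This is only the limit of the export. The real detail also depends on the footage and the texture size chosen in Step 13.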

==

Let me know if you have experimented with converting timelapse / hyperlapse video to photogrammetry. There may be some advantages.